Alright. So a couple of days ago I got a little riled up and got carried away. I think I did capture what I intuitively felt, my gut feeling, but the analysis came only after the blog post was already published. This is not uncommon for me: I let my intuition guide me, and only afterward find an analytical justification.

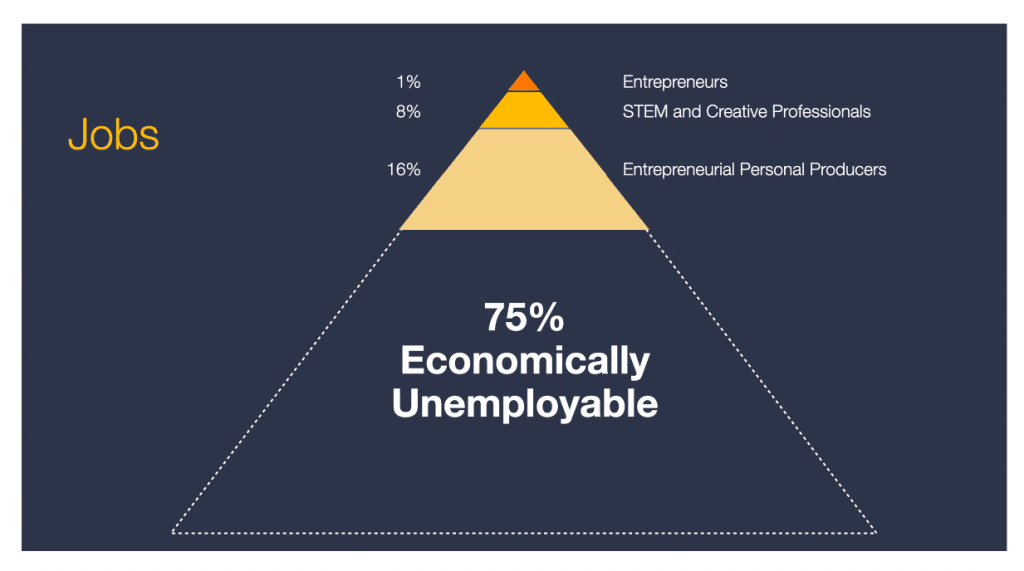

So what is THIS article about? Well, I have been hearing doomsday scenarios about the eventual destruction of humanity because of the emergence of a superior artificial intelligence and I wanted to weigh them out. I just saw one a couple of days ago that was illustrated with this less-than-hopeful chart:

No bueno. Droves of hungry people on the streets. Revolutions. And at minimum a toilet paper run.

Now, there is not just one scenario out there. All of them are some version of a dystopian future, but with important differences. Three are worth pointing out (the names are my own):

- King AI: AI is sponsored by a government, and we all become slaves to it.

- Robber Baron AI: AI is sponsored by a corporation, and we all become mindless consumers of what it produces.

- 2001 HAL AI: AI goes rogue and tries to wipe out the human race because of some unintended algorithmic convergence.

And I am adding a fourth one:

- Bored AI: Humans become pets.

Each of these assumes that the human race will play a different “role”. For the first two, the human race has to become either subjects or consumers.

For the first scenario, if we assume that our new machine overlords can do EVERYTHING better than a person (this would include your job and mine, my Uber Eats driver’s, Doña Juanita’s tortilla making, and even your personal trainer’s), then what does an AI need us for? What does it need subjects for? Technically, in this scenario, we cannot “serve” it, because other AIs and robots can do it better, cheaper, faster. The same goes if the AI is under the control of some individual or secret cabal (had to drop that in, for virality). Because of this, there is no incentive to build an AI that turns us into its subjects.

For the second scenario, let’s assume that the AI is developed by some evil corporation. It will want us to buy its services and goods, which it can produce better, cheaper, faster. But sorry, AI: you took our jobs, so we cannot buy squat. Again, there is no incentive to build an AI that turns us into mindless consumers.

Let’s talk about the last one first; we will address the evil, morally confused AI at the end. The scenario of becoming “pets” may seem the least harmful, and it lines up with something some people have proposed: we create so many resources and so much abundance that people don’t need to work, and the AI provides us with some form of universal basic income (a salary that we don’t have to work for) or some version of a bottomless soup bowl and infinite refills of your favorite beverage (unlimited latte refills appeal even to me!). We eventually become pets. Yes, we just hang around, eat, sleep, and yes, go for walks. The problem is that we humans would be terrible at this. We are not particularly cute, and we don’t jump up like my dog does when she sees me, regardless of what she was doing. I can get home at two AM, when she may be sleeping, and she still greets me as effusively as when I walk through the door on a Friday afternoon. I cannot imagine a human doing that. Maybe a roll-over, but not that. So again, there is no incentive to turn us into pets.

The runaway AI is a real risk, since we are assuming these systems will eventually become smarter than all of us collectively, and that they will, to some extent, be our “offspring”. I don’t have an incentive-based answer for this one, because it will come down to a moral, values-based decision that the AI itself will make. The best answer I have is the following. First, we need several independent AIs. We cannot allow everything to be concentrated in a single entity, especially since belligerent countries may gain a special advantage if they develop an AGI faster. Second, the answer lies not in restricting the AI but in training it. David Deutsch is clear on this one:

“The way you prevent a child from becoming a new Hitler is just to explain to them why Hitler’s ideas were bad. It’s not really controversial that they are bad, and therefore it’s really very perverse to think that the only way we can stop an AGI from becoming a Hitler is to cripple it. By the way, I don’t think such crippling is possible.”

Going back to the incentives conversation: I don’t know exactly what the future will look like, but I know that the doomsday scenarios are not likely, because the incentives don’t align. Something tells me that the most likely scenario will be one of abundance, empowerment of every human being, and generation of new value. Still, lots of things have yet to play out.